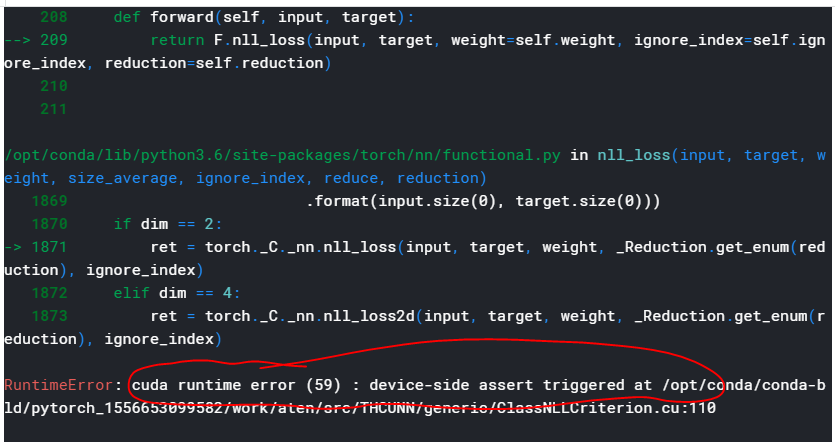

cudaSuccess: The API call returned with no errors. In the case of query calls, this can also mean that the operation being queried is complete (see cudaEventQuery() and cudaStreamQuery()).

cudaErrorMissingConfiguration: The device function being invoked (usually via cudaLaunch()) was not previously configured via the cudaConfigureCall() function.

cudaErrorMemoryAllocation: The API call failed because it was unable to allocate enough memory to perform the requested operation.

cudaErrorInitializationError: The API call failed because the CUDA driver and runtime could not be initialized.

cudaErrorLaunchFailure: An exception occurred on the device while executing a kernel. Common causes include dereferencing an invalid device pointer and accessing out-of-bounds shared memory.

cudaErrorPriorLaunchFailure: This indicated that a previous kernel launch failed. It was previously used for device emulation of kernel launches. Deprecated: this error return is deprecated as of CUDA 3.1, because device emulation mode was removed with the CUDA 3.1 release.

cudaErrorLaunchTimeout: This indicates that the device kernel took too long to execute. This can only occur if timeouts are enabled; see the device property kernelExecTimeoutEnabled for more information. The device cannot be used until cudaThreadExit() is called. All existing device memory allocations are invalid and must be reconstructed if the program is to continue using CUDA.

cudaErrorLaunchOutOfResources: This indicates that a launch did not occur because it did not have appropriate resources. Although this error is similar to cudaErrorInvalidConfiguration, it usually indicates that the user has attempted to pass too many arguments to the device kernel, or that the kernel launch specifies too many threads for the kernel's register count.

cudaErrorInvalidDeviceFunction: The requested device function does not exist or is not compiled for the proper device architecture.

cudaErrorInvalidConfiguration: This indicates that a kernel launch is requesting resources that can never be satisfied by the current device. Requesting more shared memory per block than the device supports will trigger this error, as will requesting too many threads or blocks.
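To make these return codes concrete, here is a minimal sketch of how they typically surface in a program. It assumes a recent CUDA toolkit and a single device at index 0. The `CHECK_CUDA` macro and the `scale` kernel are hypothetical names introduced only for illustration and are not part of the CUDA API. The sketch clamps the block size to the device limit (to stay clear of cudaErrorInvalidConfiguration), checks for launch-time errors right after the launch, and treats cudaSuccess from a query call as "work complete".

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical helper (not part of CUDA): print the error and leave main().
// It uses `return 1`, so it is only meant to be used inside main().
#define CHECK_CUDA(call)                                                    \
    do {                                                                    \
        cudaError_t err_ = (call);                                          \
        if (err_ != cudaSuccess) {                                          \
            std::fprintf(stderr, "%s:%d: %s (%s)\n", __FILE__, __LINE__,    \
                         cudaGetErrorName(err_), cudaGetErrorString(err_)); \
            return 1;                                                       \
        }                                                                   \
    } while (0)

// Illustrative kernel: multiplies each element by `factor` in place.
__global__ void scale(float *data, int n, float factor) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] *= factor;
}

int main() {
    const int n = 1 << 20;

    // Query the device limits instead of guessing them.
    cudaDeviceProp prop;
    CHECK_CUDA(cudaGetDeviceProperties(&prop, 0));

    // Requesting more threads per block than the device supports would
    // produce cudaErrorInvalidConfiguration, so clamp to the reported limit.
    int threads = 256;
    if (threads > prop.maxThreadsPerBlock) threads = prop.maxThreadsPerBlock;
    int blocks = (n + threads - 1) / threads;

    // An allocation that cannot be satisfied returns cudaErrorMemoryAllocation.
    float *d_data = nullptr;
    CHECK_CUDA(cudaMalloc(&d_data, n * sizeof(float)));
    CHECK_CUDA(cudaMemset(d_data, 0, n * sizeof(float)));

    scale<<<blocks, threads>>>(d_data, n, 2.0f);

    // Errors detected at launch time (bad configuration, missing device
    // function, insufficient resources) are reported here.
    CHECK_CUDA(cudaGetLastError());

    // Query call on the default stream: cudaSuccess means the queued work
    // has completed, cudaErrorNotReady means it is still running.
    cudaError_t q = cudaStreamQuery(0);
    if (q == cudaSuccess) {
        std::printf("kernel already finished\n");
    } else if (q == cudaErrorNotReady) {
        std::printf("kernel still running\n");
    } else {
        CHECK_CUDA(q);
    }

    // Errors raised while the kernel executes (launch failure, timeout)
    // only become visible once the host synchronizes with the device.
    CHECK_CUDA(cudaDeviceSynchronize());

    CHECK_CUDA(cudaFree(d_data));
    return 0;
}
```

The descriptions above refer to cudaThreadExit(); in later CUDA releases that call is deprecated in favor of cudaDeviceReset(), so a recovery path written today would likely use the newer function.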
